Second only to the speed of the processor, memory subsystem design dominates a server’s performance. The three traditional metrics of Latency, Bandwidth and Power Management are no longer enough to characterize the performance of modern server memory subsystems. Memory channels are increasing in speed and the protocol is becoming more complex, the latest being the JEDEC standard DDR4. As such, new metrics need to be employed to understand and categorize memory subsystem traffic. By studying the complex, and what is assumed to be random, traffic patterns, we can begin to design and architect the next generation of memory subsystems and servers.

To do this we need to employ some advanced methods of analysis. The methods employed in the past use a traditional logic analysis approach, in which snapshots of only small fractions of a microsecond of memory bus traffic are captured cycle by cycle, with minutes of dead time between those snapshots. The resulting traces are then offloaded and extensive post-processing is performed to glean performance metrics. This process is expensive for several reasons: logic analyzers that operate at these speeds easily cost in excess of US$150,000, and deep trace depth can add a further US$50,000 to the cost of the analyzer. After the data has been acquired, there is the manpower needed to write the software that performs the analysis. In addition, this method is incomplete, as the vast majority of traffic is never analyzed due to the inadequate trace depth and the dead time between acquisitions.

Thus a new method needed to be derived. That method is the use of high-speed counters inside an FPGA. The system can be probed by the traditional means of a DIMM interposer, probing only the Address/Command and Control signals, and the logic analyzer is replaced with a specialized unit that counts all of the metrics that would normally be gleaned from terabytes of traced data. Thus no trace memory is needed, and the counters provide greatly improved results at a fraction of the cost and over much longer periods of time. Such a product is now commercially available and is called the DDR Detective® from FuturePlus Systems.

To illustrate the metrics described, we used the DDR Detective® in an ASUS X99 DDR4-based system. A single 8GB 2 Rank DIMM was instrumented using a DDR4 DIMM interposer. The clock rate for the channel was 1067 MHz, which results in 2133 MT/s (mega transfers per second). The x86 Memtest was run in order to create memory bus traffic.

What Memory Bus Signals Need to Be Monitored

DDR memory busses, from the original DDR up to DDR4, have similar architectures and protocols. Each successive generation has increased protocol complexity and speed, but one thing has remained constant: the Address, Command and Control signals are the only ones that need to be monitored in order to glean the important performance metrics. The double data rate DQ signals and associated DQS strobes do carry important information, but that information occurs at a predictable rate and time via the protocol determined by the Address, Command and Control signals. In addition, the data carried on the DQ signals is not recognizable, as it is encoded for signal integrity and in many cases the bits are rearranged out of order for ease of motherboard signal routing. So from a performance perspective it is not important to know the value of the DQ data signals; it is only important to know how often these signals are valid and for how long.
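As a rough illustration of why the command stream alone is enough, the sketch below estimates how long the data bus is valid from Read and Write counts. It assumes every Read or Write is a burst of 8 transfers (BL8), which occupies 4 clock cycles at double data rate; burst chop and other variations are ignored. The function names and the counter values are hypothetical and are not measurements from the target system.

```python
# Assumption: every Read/Write is a burst of 8 (BL8). At double data rate
# that keeps the DQ bus valid for 8 transfers = 4 clock cycles.
BURST_LENGTH = 8
TRANSFERS_PER_CLOCK = 2


def data_bus_busy_cycles(reads: int, writes: int) -> int:
    """Clock cycles during which DQ carries valid data."""
    cycles_per_burst = BURST_LENGTH // TRANSFERS_PER_CLOCK
    return (reads + writes) * cycles_per_burst


def data_bus_utilization(reads: int, writes: int, total_cycles: int) -> float:
    """Fraction of all clock cycles in which the data bus is valid."""
    if total_cycles <= 0:
        raise ValueError("total_cycles must be positive")
    return data_bus_busy_cycles(reads, writes) / total_cycles


if __name__ == "__main__":
    # Hypothetical counter values for illustration only.
    reads, writes, total = 150_000, 50_000, 1_000_000
    print(f"Data bus valid {data_bus_utilization(reads, writes, total):.1%} of the time")
```

Nothing on the DQ or DQS pins is sampled here; the occupancy follows entirely from commands seen on the Address, Command and Control signals.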

Traditional Memory Performance Metrics

Unfortunately there are no industry standards that define computer memory performance metrics, but three are commonly used: Bandwidth, Latency and Power Management. The last is becoming increasingly dominant as data centers try to reduce their power consumption. In a recent posting it was explained that a savings of a single watt per server can add millions of dollars to Facebook’s bottom line. Since DDR4 has extensive power management features built into its protocol, Power Management is one reason supporters of DDR4 tout its superiority over the previous generation, DDR3.

Bandwidth

The most easily recognizable and most common metric used in describing performance of computer memory is Bandwidth. Per Webster’s Dictionary it is:

For DDR4 memory there are two ‘busses’: Address/Command and Control, abbreviated A/C/C, and the DQ/DQS double data rate data bus, simply referred to as the Data Bus. However, it is only the Data Bus that determines useful Bandwidth, since the A/C/C is merely overhead that carries not only the direction of each transfer, Read or Write, but also the maintenance commands for the DRAM.
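As a worked example of the upper bound on Data Bus Bandwidth, the sketch below multiplies the 2133 MT/s transfer rate of the target system by an assumed 64-bit (8-byte) data bus width, with ECC bits excluded. The bus width and the function name are assumptions for illustration; useful Bandwidth in a real system is lower because the Data Bus is not valid on every cycle.

```python
TRANSFER_RATE_MTS = 2133   # mega transfers per second, from the target system
BUS_WIDTH_BYTES = 8        # assumption: 64-bit data bus, ECC bits excluded


def peak_bandwidth_mb_per_s(rate_mts: int, width_bytes: int) -> int:
    """Theoretical peak in MB/s: one bus-width of data per transfer."""
    return rate_mts * width_bytes


if __name__ == "__main__":
    peak = peak_bandwidth_mb_per_s(TRANSFER_RATE_MTS, BUS_WIDTH_BYTES)
    print(f"Peak: {peak} MB/s (about {peak / 1000:.1f} GB/s)")
    # Useful bandwidth scales with the fraction of time the bus is valid,
    # e.g. a hypothetical 40% utilization:
    print(f"At 40% utilization: {peak * 0.40:.0f} MB/s")
```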

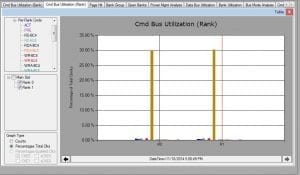

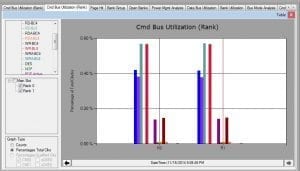

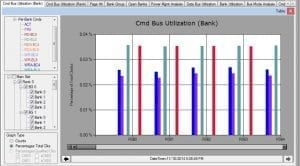

Command Bus Utilization Analysis

The objective of the memory bus is to transfer Data, and as such the entire Command bus can be seen as overhead. Due to the required spacing between commands and the maintenance commands required by the dynamic nature of DRAM, the Command bus is never more than approximately 35% utilized, and the vast majority of that is taken up by Deselects. To get a clear picture of the Command bus and the overhead that it represents, this analysis needs to cover all DDR4 commands (24 specific types), broken down by Rank and Bank and by Channel, and shown as percentages (used cycles versus total cycles, or versus CKE[1]-qualified cycles).
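The counting idea can be sketched in software as follows. This is a simplified model, not the DDR Detective® implementation: it decodes one command per clock from CS_n, ACT_n, RAS_n, CAS_n and WE_n following the basic DDR4 command truth table, collapses the 24 command types into ten basic classes (no auto-precharge, burst-chop or self-refresh variants, and no per-Rank or per-Bank breakdown), and reports each class as a percentage of CKE-qualified cycles. The `Sample` type and all sample values are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, NamedTuple


class Sample(NamedTuple):
    """One clock cycle of control signals (1 = high, 0 = low)."""
    cke: int
    cs_n: int
    act_n: int
    ras_n: int
    cas_n: int
    we_n: int


# (RAS_n, CAS_n, WE_n) decode, valid when CS_n is low and ACT_n is high.
_DECODE = {
    (0, 0, 0): "MRS",   # mode register set
    (0, 0, 1): "REF",   # refresh
    (0, 1, 0): "PRE",   # precharge
    (0, 1, 1): "RFU",   # reserved
    (1, 0, 0): "WR",    # write
    (1, 0, 1): "RD",    # read
    (1, 1, 0): "ZQ",    # ZQ calibration
    (1, 1, 1): "NOP",
}


def decode(s: Sample) -> str:
    if s.cs_n:
        return "DES"    # chip not selected: Deselect
    if not s.act_n:
        return "ACT"    # Activate; RAS/CAS/WE pins carry row address bits
    return _DECODE[(s.ras_n, s.cas_n, s.we_n)]


def utilization(samples: Iterable[Sample]) -> Dict[str, float]:
    """Percentage of CKE-qualified cycles occupied by each command class."""
    counts: Counter = Counter()
    qualified = 0
    for s in samples:
        if not s.cke:   # clock not enabled: cycle is not qualified
            continue
        qualified += 1
        counts[decode(s)] += 1
    if qualified == 0:
        return {}
    return {cmd: 100.0 * n / qualified for cmd, n in counts.items()}


if __name__ == "__main__":
    # Hypothetical traffic: mostly Deselects, a few commands, two CKE-low cycles.
    samples = (
        [Sample(1, 1, 1, 1, 1, 1)] * 6
        + [Sample(1, 0, 0, 1, 1, 1),   # ACT
           Sample(1, 0, 1, 1, 0, 1),   # RD
           Sample(1, 0, 1, 1, 0, 0),   # WR
           Sample(1, 0, 1, 0, 0, 1)]   # REF
        + [Sample(0, 1, 1, 1, 1, 1)] * 2
    )
    for cmd, pct in sorted(utilization(samples).items()):
        print(f"{cmd:4s} {pct:5.1f}%")
```

Because only running counters are kept, memory use stays constant no matter how long the measurement runs, which is the property that lets a counter-based unit replace deep trace memory.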

Why Measure This?

- To identify system hot spots.

- To verify that the traffic is what you would expect given the software you are running.

- To look for write optimization (Writes much faster than Reads).

- To see if infrequent transactions are ever occurring.

- To verify diagnostics (ex. Is a memory test really covering all banks and ranks equally?).
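The last point, checking whether a diagnostic touches all banks and ranks equally, can be sketched as a simple comparison of per-bank counts against their mean. The sketch assumes 2 ranks of 16 banks each, a 25% tolerance, and a hypothetical dictionary of access counts keyed by (rank, bank); none of these values are measurements from the target system.

```python
from typing import Dict, List, Tuple


def uneven_banks(
    counts: Dict[Tuple[int, int], int],
    ranks: int = 2,             # assumption: 2 Rank DIMM
    banks_per_rank: int = 16,   # assumption: 4 bank groups x 4 banks
    tolerance: float = 0.25,    # assumption: flag deviations above 25% of mean
) -> List[Tuple[int, int, int]]:
    """Return (rank, bank, count) for banks far from the mean access count."""
    locations = [(r, b) for r in range(ranks) for b in range(banks_per_rank)]
    total = sum(counts.get(loc, 0) for loc in locations)
    if total == 0:
        # Nothing was accessed at all: every bank is uncovered.
        return [(r, b, 0) for r, b in locations]
    mean = total / len(locations)
    flagged = []
    for r, b in locations:
        n = counts.get((r, b), 0)
        if abs(n - mean) > tolerance * mean:
            flagged.append((r, b, n))
    return flagged


if __name__ == "__main__":
    # Hypothetical counts: uniform traffic except one hot and one idle bank.
    counts = {(r, b): 1000 for r in range(2) for b in range(16)}
    counts[(0, 5)] = 5000   # hot spot
    counts[(1, 3)] = 0      # never touched
    for rank, bank, n in uneven_banks(counts):
        print(f"rank {rank} bank {bank}: {n} accesses")
```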

Below is an example of Command Bus Utilization taken from our DDR4 target system.

[1] CKE is the clock enable signal; the clock is considered valid only while this signal is true.

So in summary, understanding the commands and how they are distributed across the memory bus for various workloads can give insight into better software and hardware design. In our next post we will take a look at Data bus bandwidth.